The Replication Crisis in the Age of The Science™

Why so many have so little trust in The Science.

For the last 10 years or so, The Science™ has been taking an awful beating in the eyes of the average layperson. Trust is down across the board in most of our institutions - media, government, public health, and yes - even science. When major institutions lose the trust of the public, there is usually one reason, and it’s not that the public is ignorant. It’s actually the exact opposite. People lose trust in an institution for the same reason trust is lost in a marriage, a friendship, or a work relationship: one party has done something (usually repeatedly) to break it. Our current government never had a good track record to begin with, and the whole “pandemic” experience just exacerbated that problem. This shouldn’t be surprising to anyone, and I’ve been yelling into the void about that particular issue for years. You can relax, though, because right now I really just want to talk about that other institution: science.

Science is almost single-handedly responsible for our standard of living, and even for the fact that most of us are living at all. It wasn’t too long ago that living to the ripe old age of 45 was quite an accomplishment, and that’s before we even get to the multitude of technological advances of the last century.

Before we go further, I’d like to clarify that there are in fact two types of science at work here. One is actual science, performed in pursuit of the truth using facts and logic, and the other has become known simply as The Science™. The latter is what was used extensively during the pandemic to justify government interventions that real science was unable to justify.

As I said, if trust is broken it’s because someone broke it.

In 2005, John P. A. Ioannidis published his paper, Why Most Published Research Findings Are False. In the first paragraph, he makes this somewhat unnerving statement:

> There is increasing concern that in modern research, false findings may be the majority or even the vast majority of published research claims.

In his summary, Ioannidis makes this claim:

> There is increasing concern that most current published research findings are false. The probability that a research claim is true may depend on study power and bias…
>
> Simulations show that for most study designs and settings, it is more likely for a research claim to be false than true. Moreover, for many current scientific fields, claimed research findings may often be simply accurate measures of the prevailing bias.

Accurate measures of the prevailing bias, eh? This was almost 20 years ago. If prevailing bias amongst scientists was a problem then, what do you think it’s like in our current climate of uber-polarization and politicization?
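For readers who want to see how study power and bias actually enter the picture, Ioannidis’ paper expresses the probability that a claimed finding is true (its positive predictive value, or PPV) in terms of R, the pre-study odds that a tested relationship is real; alpha, the significance threshold; beta, the Type II error rate (so power is 1 - beta); and u, the share of analyses that get reported as findings purely because of bias. What follows is a minimal Python sketch of that formula. The scenario inputs are illustrative assumptions I picked, not figures from any particular field.

```python
def ppv(R: float, alpha: float, beta: float, u: float = 0.0) -> float:
    """Positive predictive value of a claimed research finding,
    following the bias-adjusted formula in Ioannidis (2005).

    R     -- pre-study odds that a tested relationship is true
    alpha -- Type I error rate (significance threshold)
    beta  -- Type II error rate (power = 1 - beta)
    u     -- proportion of analyses reported as findings due to bias
    """
    # True relationships that get reported as findings: those detected
    # thanks to statistical power, plus missed ones "rescued" by bias.
    true_claims = (1 - beta) * R + u * beta * R
    # Every relationship reported as a finding, true or false.
    all_claims = R + alpha - beta * R + u - u * alpha + u * beta * R
    return true_claims / all_claims


if __name__ == "__main__":
    # Illustrative inputs only (assumed, not taken from any real field).
    scenarios = {
        "well-powered, even odds, no bias": (1.0, 0.05, 0.2, 0.0),
        "underpowered, long-shot, some bias": (0.1, 0.05, 0.8, 0.3),
    }
    for label, (R, alpha, beta, u) in scenarios.items():
        print(f"{label}: PPV = {ppv(R, alpha, beta, u):.2f}")
```

With these assumed inputs, the first scenario gives a PPV of about 0.94, while the second drops to about 0.12, meaning that roughly seven out of eight claimed findings in that setting would be false.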

Since then (as one might expect), things have only gotten worse. Do an internet search on “replication crisis” and you will have reading material for the entire weekend. That’s remarkable, given that most people have never even heard of it, and that “we’re just following The Science™” has been the mantra since early 2020.

Here is how the National Institutes of Health defines replication:

> Replication is one of the key ways scientists build confidence in the scientific merit of results. When the result from one study is found to be consistent by another study, it is more likely to represent a reliable claim to new knowledge.

If a given study cannot be replicated, we can have very little confidence that its findings are accurate. In short, it usually means it’s junk.

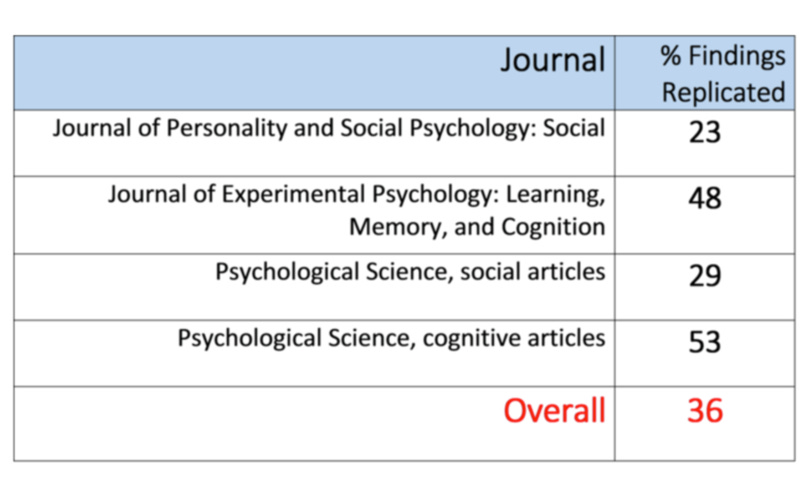

This replication crisis has been studied extensively over the last several years, and though the actual percentages vary a little, the findings agree on one thing: there is a legitimate crisis in the scientific literature. As if the prospect of most findings being false (Ioannidis’ “majority of published research claims”) weren’t bad enough, the problem appears to be especially severe in psychology, where up to 64% of papers in leading journals could not be replicated. That’s huge. And it gets worse from here, because the worst of the worst just so happens to be in the social sciences, where only 23% of papers from a reputable journal were replicable. If this sample is at all representative of the whole, then 77% of papers in the social sciences are basically crap, and very likely simply accurate measures of the prevailing bias.

But wait - there’s more!

So, it’s one thing to have all these papers floating around out there masquerading as legitimate science because they’re “peer-reviewed”, but that doesn’t mean anyone actually relies on them, does it? As it turns out, research that is less likely to be true is generally cited at a much higher rate than research that holds up. Not only that, but it’s well understood that experts can usually predict fairly well which research will replicate and which will not, which raises the question: why are scientists citing something that looks sketchy, never mind journals publishing it in the first place?

If there was ever any doubt that “the experts” could spot these bad papers, then a study by Dutch psychologist Suzanne Hoogeveen ought to put that notion to rest:

Laypeople Can Predict Which Social-Science Studies Will Be Replicated Successfully

Indeed they can, and apparently with about 60% accuracy, which is considerably better odds than most published research offers.

This isn’t at all surprising to me. It’s simply that old trait, evolved in humans over millennia, known as the bullshit detector. At any rate, it’s always nice to hear the experts say it, and it should be good news to those of us who’ve been told ad nauseam that we should be very careful about stating our informed opinions on matters in which we are not experts.

I think the fact that the biggest offenders are in the social sciences goes a long way to explaining many of the ills that are infecting society in general today. It also makes it easy to understand the workings behind the recent bombshell exposé of the WPATH Files. That’s another story, but it’s an obvious effect of shoddy research being accepted, propagated, and defended despite problems that are obvious even to the layperson.

Another pertinent example of this is the letter that started (or at least gave a big boost to) the opioid crisis in North America.

This “five-sentence letter” appeared in the peer-reviewed New England Journal of Medicine in 1980 under the title Addiction Rare in Patients Treated with Narcotics. It was cited more than 600 times through 2017, compared with about 11 citations for other comparable letters published in that journal around the same time.

This is the letter:

> Recently, we examined our current files to determine the incidence of narcotic addiction in 39,946 hospitalized medical patients who were monitored consecutively. Although there were 11,882 patients who received at least one narcotic preparation, there were only four cases of reasonably well documented addiction in patients who had no history of addiction. The addiction was considered major in only one instance. The drugs implicated were meperidine in two patients, Percodan in one, and hydromorphone in one. We conclude that despite widespread use of narcotic drugs in hospitals, the development of addiction is rare in medical patients with no history of addiction.
>
> Jane Porter
>
> Hershel Jick, M.D.

This was, of course, right before the opioid crisis began. I’m not going to get into the particulars (you can read them yourself at the link below), but we can all see the results on the streets of our towns and cities and in the news every evening.

In 2017, the New England Journal of Medicine published another letter, this one called A 1980 Letter on the Risk of Opioid Addiction. It came to this conclusion:

> In conclusion, we found that a five-sentence letter published in the Journal in 1980 was heavily and uncritically cited as evidence that addiction was rare with long-term opioid therapy. We believe that this citation pattern contributed to the North American opioid crisis by helping to shape a narrative that allayed prescribers’ concerns about the risk of addiction associated with long-term opioid therapy. In 2007, the manufacturer of OxyContin and three senior executives pleaded guilty to federal criminal charges that they misled regulators, doctors, and patients about the risk of addiction associated with the drug. Our findings highlight the potential consequences of inaccurate citation and underscore the need for diligence when citing previously published studies.

This case is a textbook example of what I’m talking about here, and it shows how much damage can be done by this kind of irresponsible behavior. I don’t know if we’ll ever recover from that one. Remember that it’s easy to spot these things - even ordinary citizens can do it. So why on earth would anybody - especially a doctor - conclude from a study of hospitalized patients that prolonged use of opioids would be safe for someone who is not under supervised care in a hospital? Observant people are asking the exact same questions now about a lot of things going on in the medical world, and more often than not, they are shut down, shouted down, or outright fired and cancelled for doing it. That’s another issue, but this problem of trust in medicine and public health is probably far bigger than we know, and it will have its own grisly results.

So, if people no longer trust The Science™, now maybe you have a little more understanding as to why that might be. The sooner we get back to doing real science, the sooner that trust can begin to be repaired, but as with all trust issues, that promises to be a long and winding road.
